In previous posts, I’ve explored how to use Bing Maps with SQL Server spatial data. In this post, I want to explore the Bing Maps control a little more. Specifically, I want to look at how to use the Bing Maps control to geocode a street address (that is, find the latitude and longitude coordinates of the address), and how to reverse-geocode a spatial location to find the corresponding street address.

The first step in building a Web page that uses the Bing Maps control to geocode an address is to add a <div> tag to host the map control, and to use the page body’s onload event to call a JavaScript function that loads the map, like this:

<head>

<!-- add a reference to the Virtual Earth map control -->

<script type="text/javascript"

src="http://dev.virtualearth.net/mapcontrol/mapcontrol.ashx?v=6.3">

</script>

<script type="text/javascript">

// the map object is shared by all the functions on the page

var map = null;

function GetMap() {

map = new VEMap('mapDiv');

map.LoadMap();

}

</script>

</head>

<body onload="GetMap()">

<div id="mapDiv" style="position:relative; width:600px; height:400px;">

</div>

</body>

Next, you need a textbox so that users can enter the address they want to geocode, a button they can click to geocode the address, and two textboxes to show the resulting latitude and longitude coordinates.

Address:<input id="txtAddress" type="text" style="width:340px" />

<input style="width:60px" id="btnFind" type="button" value="Find" onclick="return btnFind_onclick()" />

Latitude:<input id="txtLat" type="text" style="width:400px" />

Longitude:<input id="txtLong" type="text" style="width:400px" />

Note the onclick property of the button control, which calls the function that uses Bing Maps to geocode the address. Here’s the code to do that:

function btnFind_onclick() {

//Geocode the address to find the Lat/Long location

map.Geocode(document.getElementById("txtAddress").value, onGeoCode, new VEGeocodeOptions());

}

Note that the code in the btnFind_onclick function calls the Geocode method of the map control, specifying the address to be geocoded, the name of the callback function to use to process the results (onGeoCode), and a VEGeocodeOptions object that ensures the user is shown a list of options when the address has multiple possible matches. The callback function looks like this:

function onGeoCode(layer, resultsArray, places, hasMore, veErrorMessage) {

var findPlaceResults = null;

// verify the search location was found

if (places == null || places.length < 1) {

alert("The address was not found");

}

else {

// we've successfully geocoded the address, so add a pin

findPlaceResults = places[0].LatLong;

addPinToMap(findPlaceResults);

}

}
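The empty-result check in this callback can also be pulled out into a small helper, which makes it easy to test without loading the map control. The sketch below is one way to do that. The name firstLatLong is hypothetical and not part of the Bing Maps API; the only assumption is that each place object exposes a LatLong property, as it does in the callback above.

```javascript
// Hypothetical helper (not part of the map control):
// returns the LatLong of the first match, or null when nothing was found.
function firstLatLong(places) {
    // the callback may receive null or an empty array when there is no match
    if (!places || places.length < 1) {
        return null;
    }
    return places[0].LatLong;
}
```

With a helper like this, the callback only has to test the return value for null before calling addPinToMap.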

The callback function is called when the Geocode method returns. Assuming a location has been found, it calls the following addPinToMap function to display the results:

function addPinToMap(LatLon) {

// clear all shapes and add a pin

map.Clear();

var pushpoint = new VEShape(VEShapeType.Pushpin, LatLon);

map.AddShape(pushpoint);

// center and zoom on the pin

map.SetCenterAndZoom(LatLon, 13);

// display the Lat and Long coordinates

document.getElementById("txtLat").value = LatLon.Latitude;

document.getElementById("txtLong").value = LatLon.Longitude;

}

This adds a pin to the map and centers and zooms to ensure it can be seen clearly. It then displays the latitude and longitude in the textboxes defined earlier.
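The raw coordinates can run to many decimal places. If you would rather show a shorter value in the textboxes, a small formatting helper is one option. This is only a sketch: the name formatCoordinate and the default of five decimal places (roughly one metre of precision) are my own assumptions, not something the map control requires.

```javascript
// Hypothetical helper: formats a coordinate for display.
// digits is optional and defaults to 5 decimal places (an assumed default).
function formatCoordinate(value, digits) {
    var places = (digits === undefined) ? 5 : digits;
    // return an empty string for anything that is not a usable number
    if (typeof value !== "number" || !isFinite(value)) {
        return "";
    }
    return value.toFixed(places);
}
```

In addPinToMap, the textbox assignments could then use formatCoordinate(LatLon.Latitude) and formatCoordinate(LatLon.Longitude) instead of the raw values.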

We now have all the code required to geocode an address, but what about the opposite? Ideally, we also want the user to be able to click a location on the map and reverse-geocode the point that was clicked to find the address.

Of course, the Bing Maps map control already responds to user clicks, so our application will use right-clicks to enable users to specify a location. We’ll do this by attaching an onclick event handler to the map control in the GetMap function (which you will recall is called when the page loads to display the map), and then checking for a right-click before reverse-geocoding the clicked location:

// added to the GetMap function

map.AttachEvent("onclick", map_click);

function map_click(e) {

// check for right-click

if (e.rightMouseButton) {

var clickPnt = null;

// some map views return pixel XY coordinates, some Lat Long

// We need to convert XY to LatLong

if (e.latLong) {

clickPnt = e.latLong;

} else {

var clickPixel = new VEPixel(e.mapX, e.mapY);

clickPnt = map.PixelToLatLong(clickPixel);

}

// add a pin to the map

addPinToMap(clickPnt);

// reverse-geocode the point the user clicked to find the street address

map.FindLocations(clickPnt, onReverseGeoCode);

}

}
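The coordinate check inside map_click can also be written as a standalone function, which makes it possible to exercise both branches without a live map. In this sketch, resolveClickPoint and its toLatLong parameter are hypothetical names of my own; toLatLong stands in for the conversion that the handler performs with VEPixel and map.PixelToLatLong.

```javascript
// Hypothetical helper: returns the clicked location as a lat/long value.
// e         - the event object passed to the click handler
// toLatLong - a function (x, y) that converts pixel coordinates to a lat/long
function resolveClickPoint(e, toLatLong) {
    // use the lat/long directly when the event already carries one
    if (e.latLong) {
        return e.latLong;
    }
    // otherwise convert the pixel coordinates
    return toLatLong(e.mapX, e.mapY);
}

// In the page, the converter would wrap the map control, for example:
//   resolveClickPoint(e, function (x, y) {
//       return map.PixelToLatLong(new VEPixel(x, y));
//   });
```

Passing the converter in as a parameter keeps the helper independent of the map object, at the cost of one extra function at the call site.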

The map_click handler finds the latitude and longitude of the clicked location (in some views, the map control reports X and Y pixel coordinates instead, so we need to check for that), displays a pin on the map at the clicked location, and then uses the FindLocations method of the map control to find the address. A callback function named onReverseGeoCode is used to process the results:

function onReverseGeoCode(locations) {

// verify the search location was found

if (locations == null || locations.length < 1) {

document.getElementById("txtAddress").value = "Address not found";

}

else {

// we've successfully found the address, so update the Address textbox

document.getElementById("txtAddress").value = locations[0].Name;

}

}

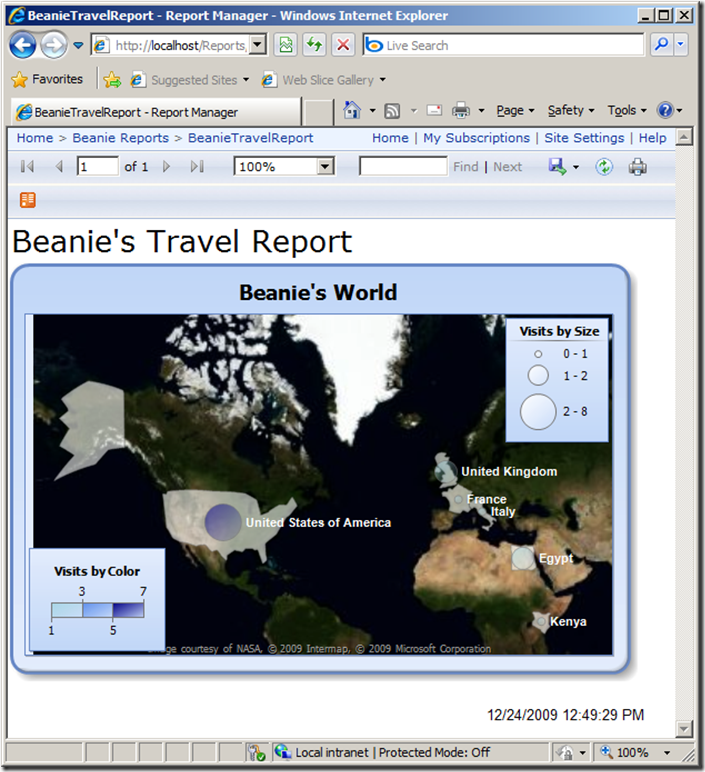

The completed application looks like this:

You can try the page out for yourself here, and you can download the source code from here.